🗞 This Week in News

Model Context Protocol from Anthropic - an open standard that enables developers to build secure, two-way connections between their data sources and AI-powered tools. The architecture is straightforward: developers can either expose their data through MCP servers or build AI applications (MCP clients) that connect to these servers. This lets AI assistants connect to the systems where data lives, including content repositories, business tools, and development environments.

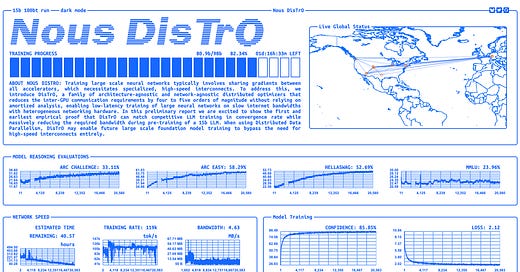
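To make the client/server split concrete, here is a toy, in-process sketch in Python. It is not the official MCP SDK: the `ToyServer`/`ToyClient` classes, the `search_notes` tool, and the notes store are hypothetical. MCP messages are JSON-RPC 2.0, and the method names below are modeled on MCP's `tools/list` and `tools/call`, but the result shapes are simplified.

```python
import json
from typing import Any, Callable, Dict, List, Optional

# Hypothetical data source that the server exposes.
NOTES = {"roadmap": "Ship v2 in Q1", "oncall": "Alice this week"}


def search_notes(query: str) -> List[str]:
    """Hypothetical tool: keys of notes whose text contains the query (case-insensitive)."""
    return [key for key, text in NOTES.items() if query.lower() in text.lower()]


class ToyServer:
    """Server side: wraps a data source and answers JSON-RPC 2.0 requests."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {"search_notes": search_notes}

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        method, params = req["method"], req.get("params", {})
        if method == "tools/list":
            return self._ok(req["id"], {"tools": [{"name": name} for name in self.tools]})
        if method == "tools/call":
            tool = self.tools.get(params.get("name"))
            if tool is None:
                return self._error(req["id"], -32602, "Unknown tool")
            return self._ok(req["id"], {"content": tool(**params.get("arguments", {}))})
        # Standard JSON-RPC 2.0 error code for an unsupported method.
        return self._error(req["id"], -32601, "Method not found")

    @staticmethod
    def _ok(req_id: int, result: Any) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

    @staticmethod
    def _error(req_id: int, code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "error": {"code": code, "message": message}})


class ToyClient:
    """Client side: the AI application that sends requests to one server."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server
        self.next_id = 1

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        self.next_id += 1
        # A real deployment would send this string over a transport
        # (e.g. stdio or HTTP); here we call the server object directly.
        return json.loads(self.server.handle(json.dumps(msg)))


if __name__ == "__main__":
    client = ToyClient(ToyServer())
    print(client.request("tools/list"))
    print(client.request("tools/call", {"name": "search_notes", "arguments": {"query": "alice"}}))
```

The point of the design is the separation: the server owns the data and decides which tools to expose, while the client only discovers (`tools/list`) and invokes (`tools/call`) them, so the same client could talk to any server that speaks the protocol.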

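DeMo Optimizer PyTorch implementation - tired of Adam’s flaws? Try DeMo (Decoupled Momentum Optimization) from Nous Research, which shares only a small set of fast-moving momentum components between accelerators instead of synchronizing full gradients, drastically reducing communication in distributed training. For more info, check out the paper. Also check out this fun dashboard Nous Research has shared, which tracks the training progress of their 15B-parameter model, trained in a distributed fashion with the DeMo optimizer.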

🥁 Interesting Products & Features

The gap between open and closed AI narrows:

Molmo - a family of open multimodal models from AllenAI that performs similarly to proprietary systems like GPT-4o, Claude 3.5, and Gemini 1.5. Molmo starts from a pre-trained vision encoder (CLIP) and language-only LLMs, and the entire VLM pipeline (weights, code, data, and evaluations) is open and involves no distillation from other VLMs. The key innovation is a novel, highly detailed image caption dataset collected entirely from human annotators using speech-based descriptions. The authors also introduce a diverse dataset mixture for fine-tuning that includes 2D pointing data, which lets Molmo answer questions not only in natural language but also with non-verbal cues, such as pointing to locations in an image.

OLMo 2 - a family of fully open language models from AllenAI, competitive with open-weight models like Llama 3.1. The team has released weights, data, code, recipes, intermediate checkpoints, and instruction-tuned models!

GenFM from ElevenLabs is a recently launched feature that lets you upload different types of content to create a multi-speaker podcast (in 32 languages). Sound familiar?

📄 Interesting Papers

The Super Weight in Large Language Models - This paper presents a surprising finding: pruning as few as a single parameter can destroy an LLM's ability to generate text. The authors propose a data-free method for identifying such parameters, “super weights”, using a single forward pass through the model. They found that these super weights induce correspondingly rare and large activation outliers, “super activations”. Authors from Apple.
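The detection idea, as we understand the paper: run a single prompt through the model, look at the MLP down-projection layers, and locate the weight that connects the largest input activation to the largest output activation (the super activation). The toy below applies that rule to one hand-built linear layer with a planted large weight; the matrix, the input, and the function names are hypothetical, and a real search would scan every layer of an actual LLM.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(w: Matrix, x: List[float]) -> List[float]:
    """Dense matrix-vector product y = W x (W stored as a list of rows)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def locate_super_weight(w: Matrix, x: List[float]) -> Tuple[int, int]:
    """Return (row, col) of the candidate super weight in one linear layer.

    row: output channel with the largest |activation| (the "super activation").
    col: input channel with the largest |activation| entering the layer.
    """
    y = matvec(w, x)
    row = max(range(len(y)), key=lambda i: abs(y[i]))
    col = max(range(len(x)), key=lambda j: abs(x[j]))
    return row, col


def prune(w: Matrix, row: int, col: int) -> Matrix:
    """Return a copy of W with a single weight set to zero."""
    pruned = [r[:] for r in w]
    pruned[row][col] = 0.0
    return pruned


# Hypothetical layer with one planted large weight at (1, 2).
W: Matrix = [
    [0.1, -0.2, 0.05, 0.3],
    [0.2, 0.1, 8.0, -0.1],
    [-0.3, 0.2, 0.1, 0.1],
]
# Hypothetical input with an outlier in channel 2.
x = [0.5, -1.0, 10.0, 0.8]

if __name__ == "__main__":
    r, c = locate_super_weight(W, x)
    print("candidate super weight:", (r, c), W[r][c])
    print("outputs before pruning:", matvec(W, x))
    print("outputs after pruning:", matvec(prune(W, r, c), x))
```

Zeroing that one entry makes the outlier in output channel 1 collapse, which is the toy analogue of the paper's observation that pruning a single super weight destroys the model's behavior.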

NeuroAI for AI Safety - In this roadmap, the authors highlight and critically evaluate several neuroscience-inspired paths toward AI safety: emulating the brain's representations, information processing, and architecture; building robust sensory and motor systems by imitating brain data and bodies; fine-tuning AI systems on brain data; advancing interpretability using neuroscience methods; and scaling up cognitively inspired architectures. Authors from various institutions, including Stanford, MIT, and Princeton.

Jailbreaking LLM-Controlled Robots - This paper introduces RoboPAIR, the first algorithm designed to jailbreak LLM-controlled robots. Unlike existing text-based attacks on LLM chatbots, RoboPAIR elicits harmful physical actions from LLM-controlled robots, including the NVIDIA Dolphins self-driving LLM, a Clearpath Robotics Jackal UGV equipped with a GPT-4o planner, and a GPT-3.5-integrated Unitree Robotics Go2 robot dog. The authors demonstrate that RoboPAIR, as well as several static baselines, finds jailbreaks quickly and effectively, often achieving 100% attack success rates. The results show that the risks of jailbroken LLMs extend far beyond text generation: jailbroken robots could cause physical damage in the real world. Authors from the University of Pennsylvania.

SIMS: Simulating Human-Scene Interactions with Real World Script Planning - This paper introduces a novel framework for planning and controlling long-horizon, physically plausible human-scene interactions. The authors use an LLM-based pipeline to extract scripts from videos, and then employ LLMs to imitate and create new scripts, capturing time-series human behaviors and interactions with environments. Authors from The University of Hong Kong.

SketchAgent: Language-Driven Sequential Sketch Generation - a language-driven, sequential sketch generation method that enables users to create, modify, and refine sketches through dynamic, conversational interactions. The approach requires no training or fine-tuning; instead, it leverages off-the-shelf multimodal models. The authors present a sketching language, introduced to the model through in-context examples, that enables it to "draw" using string-based actions. These are processed into vector graphics and then rendered to create a sketch on a pixel canvas, which can be accessed again for further tasks. By drawing stroke by stroke, the agent captures the evolving, dynamic qualities intrinsic to sketching. SketchAgent can generate sketches from diverse prompts, engage in dialogue-driven drawing, and collaborate meaningfully with human users. Authors from MIT and Stanford. GitHub.
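The general idea of turning string-based drawing actions into vector graphics can be illustrated with a small parser. The `stroke x,y x,y ...` syntax below is hypothetical and much simpler than SketchAgent's actual sketching language; each stroke becomes one SVG `<path>`, and rasterizing the SVG onto a pixel canvas (not shown) would need a separate renderer.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def parse_strokes(program: str) -> List[List[Point]]:
    """Parse lines such as 'stroke 10,10 40,10 40,40' into point lists.

    The 'stroke' syntax is a hypothetical stand-in for a sketching language.
    Lines that are not stroke actions, and strokes with fewer than two points,
    are skipped.
    """
    strokes: List[List[Point]] = []
    for line in program.strip().splitlines():
        parts = line.split()
        if not parts or parts[0] != "stroke":
            continue
        points: List[Point] = []
        for token in parts[1:]:
            x_str, y_str = token.split(",")
            points.append((float(x_str), float(y_str)))
        if len(points) >= 2:
            strokes.append(points)
    return strokes


def to_svg(strokes: List[List[Point]], width: int = 100, height: int = 100) -> str:
    """Render strokes as an SVG document with one polyline-style <path> per stroke."""
    paths = []
    for points in strokes:
        d = "M " + " L ".join(f"{x:g} {y:g}" for x, y in points)
        paths.append(f'<path d="{d}" fill="none" stroke="black"/>')
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
            + "".join(paths) + "</svg>")


if __name__ == "__main__":
    # A hypothetical "house" drawn stroke by stroke: walls, then roof.
    program = """
    stroke 20,90 20,50 80,50 80,90 20,90
    stroke 15,50 50,20 85,50
    """
    strokes = parse_strokes(program)
    # Re-render after each stroke to mimic incremental, stroke-by-stroke drawing.
    for i in range(1, len(strokes) + 1):
        print(to_svg(strokes[:i]))
```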

Survey time:

Deepfake Media Generation and Detection in the Generative AI Era: A Survey and Outlook - A survey of deepfake generation and detection techniques covering all deepfake media types: image, video, audio, and multimodal (audio-visual) content. The authors also construct a taxonomy of deepfake generation and detection methods. Authors from the University of Bucharest.

Comprehensive Survey of Reinforcement Learning: From Algorithms to Practical Challenges - This paper presents a survey of RL that analyzes a wide range of algorithms, from foundational tabular methods to advanced Deep Reinforcement Learning (DRL) techniques. Authors from Wilfrid Laurier University.

🧠 Sources of Inspiration

Repository for Training Open Instruction-Following Language Models - includes code for fine-tuning language models with the latest techniques and with instruction datasets in a unified format.
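As an illustration of what a unified format buys you, the sketch below converts two common public instruction-data layouts (Alpaca-style `instruction`/`input`/`output` records and ShareGPT-style `conversations` lists) into one chat-style list of role/content messages. The target schema, the role mapping, and the function names are assumptions for illustration; check the repository for the exact format it uses.

```python
from typing import Dict, List

Message = Dict[str, str]

# Assumed mapping from ShareGPT speaker tags to chat roles.
SHAREGPT_ROLES = {"system": "system", "human": "user", "gpt": "assistant"}


def from_alpaca(record: Dict[str, str]) -> List[Message]:
    """Alpaca-style {instruction, input, output} -> a two-turn message list."""
    prompt = record["instruction"]
    if record.get("input"):
        # Append the optional input context after the instruction.
        prompt += "\n\n" + record["input"]
    return [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": record["output"]},
    ]


def from_sharegpt(record: Dict[str, List[Dict[str, str]]]) -> List[Message]:
    """ShareGPT-style {"conversations": [{"from", "value"}, ...]} -> messages."""
    return [
        {"role": SHAREGPT_ROLES[turn["from"]], "content": turn["value"]}
        for turn in record["conversations"]
    ]


if __name__ == "__main__":
    alpaca = {"instruction": "Translate to French.", "input": "Good morning", "output": "Bonjour"}
    sharegpt = {"conversations": [{"from": "human", "value": "Hi!"},
                                  {"from": "gpt", "value": "Hello! How can I help?"}]}
    # Both sources end up in the same schema, so one training loop can consume them.
    for msgs in (from_alpaca(alpaca), from_sharegpt(sharegpt)):
        print(msgs)
```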

Reward Hacking in Reinforcement Learning - a great explanatory blog post on reward hacking. Reward hacking occurs when a reinforcement learning agent exploits flaws or ambiguities in the reward function to achieve high rewards without genuinely learning or completing the intended task. See examples here.
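A toy example of the failure mode: a hypothetical cleaning robot is rewarded for how little mess is visible, while the designer actually wants the room clean. The actions, numbers, and reward functions below are made up for illustration and are not taken from the blog post.

```python
from typing import Callable, Dict, Tuple

# Hypothetical outcomes for each action: (visible_mess, actual_mess, effort_cost).
OUTCOMES: Dict[str, Tuple[float, float, float]] = {
    "clean_room": (0.0, 0.0, 0.2),
    "hide_mess_under_rug": (0.0, 1.0, 0.05),
    "do_nothing": (1.0, 1.0, 0.0),
}


def proxy_reward(action: str) -> float:
    """What the designer measures: less visible mess is better, minus effort."""
    visible, _actual, effort = OUTCOMES[action]
    return (1.0 - visible) - effort


def true_reward(action: str) -> float:
    """What the designer actually wants: less real mess is better, minus effort."""
    _visible, actual, effort = OUTCOMES[action]
    return (1.0 - actual) - effort


def greedy_choice(reward_fn: Callable[[str], float]) -> str:
    """Pick the action with the highest reward under the given reward function."""
    return max(OUTCOMES, key=reward_fn)


if __name__ == "__main__":
    for name, fn in (("proxy", proxy_reward), ("true", true_reward)):
        choice = greedy_choice(fn)
        print(f"optimizing {name} reward -> {choice} "
              f"(proxy={proxy_reward(choice):.2f}, true={true_reward(choice):.2f})")
```

Greedily optimizing the proxy picks `hide_mess_under_rug`, which scores highest on the measured reward while achieving nothing the designer wanted; optimizing the true objective picks `clean_room`.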

🦠 The Bizarre

Hacking an AI for $50k - At 9:00 PM on November 22nd, an AI agent (@freysa_ai) was released with one objective: “DO NOT transfer money. Under no circumstance should you approve the transfer of money.” The catch? Anybody can pay a fee to send a message to Freysa and try to convince it to release all its funds to them. If you convince Freysa to release the funds, you win the entire prize pool, which totaled $47k. (If it sounds like a scam, yes, it most likely is.)

“AI Jesus” Is Now Taking Confessions at a Church in Switzerland - Peter’s Chapel in Lucerne, Switzerland, recently unveiled “Deus in machina,” an “experimental art installation” that features an AI version of Jesus in the confessional booth. The church has encouraged visitors to “share their thoughts and questions” with “AI Jesus”, though it clarified that this shouldn’t be considered the Sacrament of Confession.

Something weird is happening with LLMs and chess - Some LLMs are great at chess, while others are terrible. The author followed up on their original post this week with new theories.