NVIDIA GTC is being heralded in the news as the “Woodstock of AI” (yes, seriously). I am here to tell you that it was much, much better than that (if you don’t know the actual story behind Woodstock, it is pretty crazy and not all that it is remembered to be). While it did include an impressive gathering of people, music (generated by AI, of course), and not quite enough food, GTC was in fact a fantastic gathering of brilliant minds sharing what they have learned about AI applications (in addition to a whole lot of sales and marketing).

I had an incredible time making new friends, catching up with old friends, and learning new perspectives on AI.

I also made it on the news! Check out my interview with ABC7, below:

I wanted to share a few takeaways from the pages of notes I took throughout the event. I’ll also add some notes about the event itself.

Takeaway #1 - UX is key.

Session after session, speakers talked about the UX of AI: why it matters, and how it will be the differentiator for a lot of applications.

How will we interact with agents in the future? Ideas suggested at GTC included adding rewind/edit capabilities (“human on the loop vs in the loop”) and a managerial-style approach to agent interaction (agents running in the background and requiring human review before proceeding with advanced tasks).

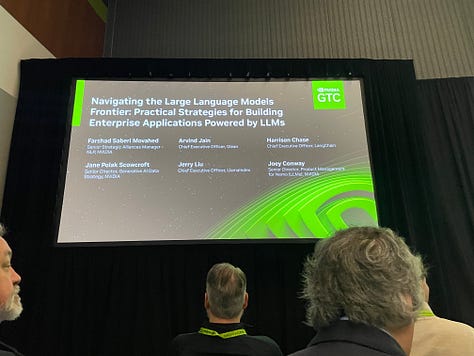

Harrison Chase (CEO, LangChain) is pretty excited about the rewind-and-edit feature in Cognition’s Devin and hinted that we may see similar functionality in LangChain’s offering in the future.

Arvind Jain (CEO, Glean) is all about speed - he said “people don’t want to wait, 10 seconds is too much”. He frames the tradeoff between speed and accuracy as a user experience question, and one we need to be thoughtful about.

In a panel of “Attention Is All You Need” authors, when asked how to make AI better, the panelists brought up improving interactions with users.

Takeaway #2 - We need better benchmarks for AI.

The general consensus is that evaluations are currently lacking.

Percy Liang (Stanford Prof, Cofounder Together AI) believes we need standardized, transparent evaluations of LLMs.

We are bad at transparency in AI - we need more transparency and openness in model building.

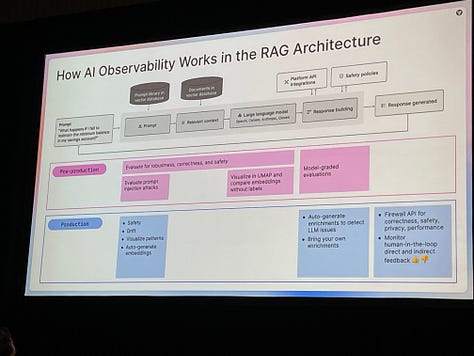

Krishna Gade (CEO, Fiddler) has built an entire product around improving observability in RAG and providing ways to evaluate models in deployment.

Takeaway #3 - People are thinking deeply about AI + using it to creatively express themselves.

Vanessa A Rosa (AI Artist) talked about her use of AI in her art and how AI changes how we understand ourselves and our relationships with our history.

Is the latent space of a model trained on Internet data a representation of human consciousness?

Amy Karle (AI Artist) is using AI in biomimicry-based art, from fashion to performances to biofeedback (check out one of her pieces, below).

Movies are being created with AI. Ed Ulbrich (CCO, Metaphysic) shared details about how AI is helping to create movies that previously couldn’t have been made. “Here,” starring Tom Hanks, will be coming out this fall. It follows a family throughout their lives, which requires a lot of simulated aging. Interpolative aging of characters at this level would not be possible with current visual effects and CGI, but it is possible with AI. Metaphysic’s impressive technology is behind a lot of viral content, and they were on America’s Got Talent (check it out). Importantly, they are advocates for actors and creatives and are being thoughtful about how their technology is used in the movie industry.

Takeaway #4 - Small models FTW.

The authors of “Attention Is All You Need” talked about how computationally expensive it is to run LLMs on simple tasks (for example, 2+2 is trivial on a calculator but can take billions of calculations when posed to an LLM).

Arthur Mensch (CEO, Mistral) is making a bet that small models are better. And the Mistral models are pretty impressive, so it looks like that bet is already paying off.

Many people, including Percy Liang (Stanford Prof, Cofounder Together AI), believe that some amount of retrieval is critical because things change over time (e.g., codebases change, search results change, products change).

Takeaway #5 - We are still learning and there is SO much to figure out.

The authors of “Attention Is All You Need” do not believe the transformer is the ultimate model - they are still seeking something that is a “step function better”.

A lot of the ideas people are currently excited about (mixture of experts, state space models) were first explored before the transformer architecture. (So perhaps we need to look to our past to find more model optimizations?)

What could replace gradient descent?? The authors of “Attention Is All You Need” believe a lot of the issues with RNNs may actually come down to gradient descent not being the best way to train them.

Percy Liang (Stanford Prof, Cofounder Together AI) believes that we are at a “critical juncture” in the field of AI.

The Event

Keynote

The Keynote hit on topics like the history of AI (AlexNet and cats), robots (including appearances from Disney robots), digital twins of products, and, of course, the unveiling of the new Blackwell GPU. Watch the keynote here (I definitely recommend the first ~30 mins and the last ~30 mins). The AI art at the beginning was stunning to see in SAP Center, with the lights synced to the visuals. The arena was packed - people were sitting on the stairs and there were overflow rooms - which gives you a sense of the scale of the conference (the arena holds ~17k people!).

The Food

Lunch at the conference was served in a massive tent (like the kind used for sporting events). I have a picture above, which shows about half of the tent, but the scale is hard to grasp from a photo. Dessert and drinks were served in the Exhibit Hall, which had a robot serving up drinks (see above) and a candy bar. On Tuesday we went to an event called “Dinner with Strangers”, where we got to meet new people over dinner.

The Meetups

The events don’t stop with the conference - many companies host evening socials. I went to a few, but my favorite was GPU Pour, an event put on by the VC firm Basis Set. It was fun meeting new people across different fields at these events.

The Swag

I sadly did not get a GPU in my swag bag. Highlights include a vulture plush from Vultr, a yo-yo from Lambda, and a beanie from Microsoft Azure. Google Cloud was also printing T-shirts at the event, which was entertaining to watch.

Thanks!

Thanks to my good friend Mark Feng for convincing me to come to NVIDIA GTC, being my early morning pre-conference running partner, and sharing a bunch of ideation around the future of AI. Also thanks to Chang Xu for the great conversation. It’s not often you get a startup founder, a professor, and a VC in the same room 🚀
