🗞 This Week in News

And the Nobel Prize goes to:

The Nobel Prize in Physics 2024 is all about machine learning. It was awarded jointly to John J. Hopfield and Geoffrey E. Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks".

The Nobel Prize in Chemistry 2024 is about proteins. One half was awarded to David Baker for building entirely new kinds of proteins, and the other half jointly to Demis Hassabis and John Jumper (both Google DeepMind) for developing an AI model, AlphaFold 2, that solved a 50-year-old problem: predicting proteins’ complex structures.

In the essay “Machines of Loving Grace,” Anthropic CEO Dario Amodei outlines potential benefits of AI, including in biology, economic development, governance, work, and meaning.

🥁 Interesting Products & Features

Inquisite - More than a search tool, Inquisite researches complex topics in depth, surfaces insights from trusted, credible sources, and helps you build research-backed documents. I had the opportunity to test it before launch and have loved using it for both academic research and product discovery. Inquisite is familiar and intuitive while also being incredibly powerful. Launched this week by Duke professors Jon Reifschneider and Pramod Singh.

Neuromnia - Neuromnia provides clinicians, parents, and teachers with powerful AI-driven tools that enhance productivity, improve treatment quality, and increase access to care for individuals on the autism spectrum. ‘Nia’, powered by Llama 3.1, is a human-centric AI co-pilot for Applied Behavior Analysis (ABA) therapy.

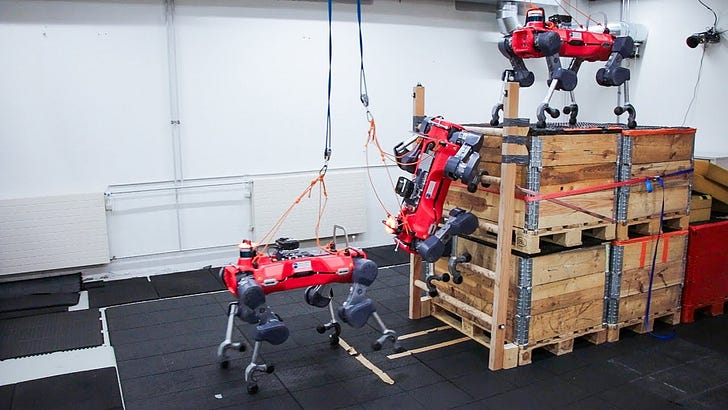

Four-legged robot learns to climb ladders - Ladders have long been a challenge for robots. Recently, ANYmal, the quadruped robot from ETH Zurich spinoff ANYbotics, tackled this challenge with reinforcement learning, which helps the system adapt to the peculiarities of different ladders. The work reports a 90% success rate and a 232x increase in climbing speed over current “state-of-the-art” systems.

📄 Interesting Papers

Differential Transformer - Transformers tend to over-allocate attention to irrelevant context. To remedy this, the authors introduce DIFF Transformer, which amplifies attention to the relevant context while canceling noise. Its differential attention mechanism computes attention scores as the difference between two separate softmax attention maps; the subtraction cancels noise and promotes the emergence of sparse attention patterns. DIFF Transformer outperforms the standard Transformer across various settings of scaling up model size and training tokens, and it offers advantages in practical applications such as long-context modeling, key information retrieval, hallucination mitigation, in-context learning, and reduction of activation outliers. Authors from Microsoft Research and Tsinghua University.
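To make the differential attention idea concrete, here is a minimal single-head sketch in plain Python (no deep learning framework). It assumes the two query/key pairs are passed in directly, whereas the paper derives them by splitting learned projections of the same input, and it treats λ as a fixed scalar; the paper learns λ and adds normalization steps that are omitted here. The helper names are illustrative, not taken from the authors' code.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_map(q, k):
    """Standard scaled dot-product attention weights: softmax(Q K^T / sqrt(d))."""
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    return softmax_rows([[s / scale for s in row] for row in scores])


def diff_attention(q1, k1, q2, k2, v, lam):
    """Single-head differential attention: (A1 - lam * A2) V.

    lam is a fixed scalar in this sketch (the paper learns it).
    Returns the differential attention weights and the attended values.
    """
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    weights = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    return weights, matmul(weights, v)


# Toy example: branch 1 focuses on the matching token, while branch 2
# spreads attention uniformly (a stand-in for shared "noise").
q1 = [[1.0, 0.0], [0.0, 1.0]]
k1 = [[2.0, 0.0], [0.0, 2.0]]
q2 = [[0.0, 0.0], [0.0, 0.0]]  # zero queries give uniform attention weights
k2 = [[1.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0]]

weights, out = diff_attention(q1, k1, q2, k2, v, lam=0.5)
for row in weights:
    print([round(w, 3) for w in row])
```

Because each softmax map has rows that sum to 1, every row of the differential weights sums to 1 − λ. In the toy example, subtracting the uniform second map pushes the weight on the non-matching token to roughly zero, while the matching token keeps most of its weight.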

Fine-Tuning CLIP's Last Visual Projector: A Few-Shot Cornucopia - The authors show that, when adapting CLIP with only a few labeled examples, simply fine-tuning the last projection matrix of the vision encoder performs strongly compared to existing baselines. They also show that regularizing training with the distance between the fine-tuned and pretrained matrices makes adapting CLIP through this layer more reliable. They call their approach “ProLIP”. Authors from Inria, Valeo.ai, and Kyutai.
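For intuition, here is a toy sketch of the recipe as described above: only the projection matrix W is updated, and the loss adds a penalty λ‖W − W0‖² (here assumed to be the squared Frobenius norm) that keeps W near its pretrained value W0. This is a simplified illustration, not the authors' code: it assumes frozen class text embeddings, plain dot-product logits (real CLIP normalizes features and uses a temperature), a tiny 2-D dataset, and hypothetical helper names such as `finetune_projection`.

```python
import math


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def project(w, f):
    """Apply the projection matrix W (k x d) to an image feature f (length d)."""
    return [sum(wij * fj for wij, fj in zip(row, f)) for row in w]


def loss_and_grad(w, w0, f, label, text_emb, lam):
    """Cross-entropy on logits t_c . (W f), plus lam * ||W - W0||_F^2.

    Returns the loss and its gradient with respect to W only.
    """
    z = project(w, f)
    logits = [sum(t * zi for t, zi in zip(tc, z)) for tc in text_emb]
    p = softmax(logits)
    reg = sum((a - b) ** 2 for ra, rb in zip(w, w0) for a, b in zip(ra, rb))
    loss = -math.log(p[label]) + lam * reg
    # dCE/dz = sum_c (p_c - y_c) * t_c, where y is the one-hot label
    err = [p[c] - (1.0 if c == label else 0.0) for c in range(len(text_emb))]
    dz = [sum(err[c] * text_emb[c][i] for c in range(len(text_emb))) for i in range(len(z))]
    # dL/dW = dz f^T + 2 * lam * (W - W0)
    grad = [[dz[i] * f[j] + 2 * lam * (w[i][j] - w0[i][j]) for j in range(len(f))]
            for i in range(len(w))]
    return loss, grad


def finetune_projection(w0, shots, text_emb, lam, lr=0.1, steps=100):
    """Full-batch gradient descent on W; everything else stays frozen."""
    w = [row[:] for row in w0]
    for _ in range(steps):
        mean_grad = [[0.0] * len(row) for row in w]
        for f, label in shots:
            _, g = loss_and_grad(w, w0, f, label, text_emb, lam)
            for i, row in enumerate(g):
                for j, gij in enumerate(row):
                    mean_grad[i][j] += gij / len(shots)
        w = [[wij - lr * gij for wij, gij in zip(wr, gr)] for wr, gr in zip(w, mean_grad)]
    return w


def distance(w, w0):
    """Frobenius distance between the fine-tuned and pretrained matrices."""
    return math.sqrt(sum((a - b) ** 2 for ra, rb in zip(w, w0) for a, b in zip(ra, rb)))


# Toy few-shot task: the pretrained projection (identity) ranks the wrong class first.
w0 = [[1.0, 0.0], [0.0, 1.0]]
text_emb = [[1.0, 0.0], [0.0, 1.0]]  # frozen class "text" embeddings
shots = [([0.2, 1.0], 0), ([1.0, 0.2], 1)]

w_loose = finetune_projection(w0, shots, text_emb, lam=0.0)
w_tight = finetune_projection(w0, shots, text_emb, lam=1.0)
print(distance(w_loose, w0), distance(w_tight, w0))
```

Comparing the two runs shows the role of λ: without regularization, W drifts far from W0 to fit the few shots, while λ = 1.0 keeps it much closer to the pretrained matrix.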

nGPT: Normalized Transformer with Representation Learning on the Hypersphere - This paper introduces the normalized Transformer (nGPT) with representation learning on the hypersphere. In nGPT, all vectors forming the embeddings, MLP, attention matrices, and hidden states are normalized to unit norm. The input stream of tokens travels on the surface of a hypersphere, with each layer contributing a displacement toward the target output predictions. These displacements are defined by the MLP and attention blocks, whose vector components also reside on the same hypersphere. Experiments show that nGPT learns much faster, reducing the number of training steps needed to reach the same accuracy by a factor of 4 to 20, depending on sequence length. Authors from NVIDIA.
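The per-layer displacement can be sketched as a normalized residual step. Assuming a unit-norm hidden state h and the raw output of an attention or MLP block, the update moves h toward the normalized block output and then renormalizes: h ← Norm(h + α(Norm(block_out) − h)). nGPT learns its step sizes α; here α is a single fixed scalar, and `ngpt_step` is a hypothetical name.

```python
import math


def normalize(v):
    """Project a (non-zero) vector back onto the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def ngpt_step(h, block_out, alpha):
    """One nGPT-style residual update on the hypersphere.

    h: current hidden state (unit norm)
    block_out: raw output of an attention or MLP block
    alpha: step size toward the block's suggestion (a fixed scalar here)
    Computes h <- Norm(h + alpha * (Norm(block_out) - h)).
    """
    target = normalize(block_out)
    return normalize([hi + alpha * (ti - hi) for hi, ti in zip(h, target)])


h = normalize([1.0, 0.0, 0.0])
block_out = [0.0, 3.0, 0.0]

for alpha in (0.0, 0.5, 1.0):
    new_h = ngpt_step(h, block_out, alpha)
    print(alpha, [round(x, 4) for x in new_h])
```

With α = 0 the state is unchanged, with α = 1 it jumps to the block's direction, and values in between interpolate between the two points while the result stays on the unit sphere.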

Natural Language Counterfactual Explanations for Graphs Using Large Language Models - Counterfactual explanations are a promising explainable AI (XAI) approach. However, these “what-if” explanations are often complex and technical, making them difficult for non-experts to understand. To bridge this gap, the authors prompt open-source LLMs with valid counterfactual instances produced by state-of-the-art (SOTA) explainers for graph-based models and have them generate natural language explanations. Authors from Sapienza University of Rome.
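As a rough illustration of this pipeline, the sketch below turns a graph counterfactual (the edges an explainer removed or added to flip a prediction) into a prompt for an LLM. The data structure, function names, and prompt wording are hypothetical and not taken from the paper; the LLM call itself is left out, since any open-source model could consume the resulting string.

```python
from dataclasses import dataclass, field


@dataclass
class GraphCounterfactual:
    """Output of a (hypothetical) graph counterfactual explainer."""
    original_label: str
    counterfactual_label: str
    removed_edges: list[tuple[str, str]] = field(default_factory=list)
    added_edges: list[tuple[str, str]] = field(default_factory=list)


def describe_edges(edges):
    """Render edges as 'u-v' pairs, or 'none' if the list is empty."""
    return ", ".join(f"{u}-{v}" for u, v in edges) if edges else "none"


def build_prompt(cf, domain):
    """Turn a counterfactual into a plain-language request for an LLM."""
    return (
        f"You are explaining a {domain} graph classifier to a non-expert.\n"
        f"The model originally predicted: {cf.original_label}.\n"
        f"Removing these edges: {describe_edges(cf.removed_edges)}\n"
        f"and adding these edges: {describe_edges(cf.added_edges)}\n"
        f"changes the prediction to: {cf.counterfactual_label}.\n"
        "In two or three sentences and without jargon, explain what this "
        "'what-if' change means and why it might flip the prediction."
    )


cf = GraphCounterfactual(
    original_label="toxic",
    counterfactual_label="non-toxic",
    removed_edges=[("C1", "N2")],
)
prompt = build_prompt(cf, domain="molecule")
print(prompt)
```

Keeping the counterfactual as structured data and rendering it into text only at the last step makes it easy to swap in a different explainer or prompt template without touching the rest of the pipeline.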

🧠 Sources of Inspiration

Three Subtle Examples of Data Leakage - An article walking through examples of data leakage and how it affects model results (for all my students).