Highlights from the Duke AI Hackathon

There hasn't been much GPTea this week, and the Duke AI Hackathon took place over the weekend, so this edition highlights projects from the hackathon instead of the news. Next week we'll be back to our regularly scheduled programming.

A few fast facts: the 2nd annual Duke AI Hackathon was held this past weekend (Nov 1-3). Students hacked for ~48 hours across five tracks: Education; Health & Wellness; Productivity & Enterprise Tools; Art, Media, & Design; and Social Impact & Climate. Projects were judged on Technical Accomplishment, Novelty, and Value Proposition by 20 community judges from big tech, startups, and VC. Each track had one winner, and the track winners then competed for the grand prizes.

Check out some of the incredible projects built in 48 hours (yes, 48 hours!) below. These are a few that I really enjoyed, but I have definitely missed some awesome ones, so be sure to visit the Devpost and check them all out.

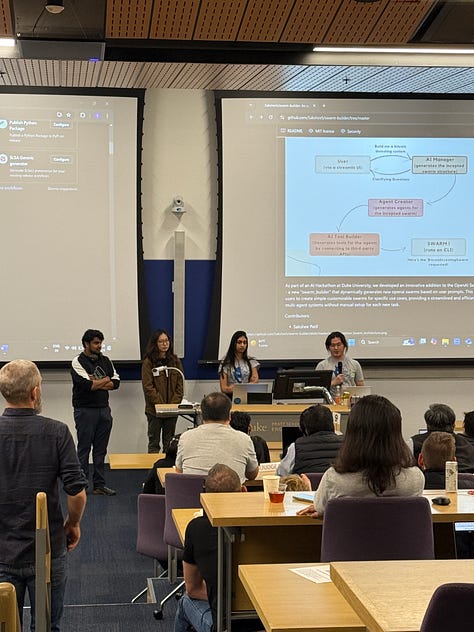

Inception Swarm 🐝

🏆 Grand Prize Overall Winner

🏆 Enterprise Tools and Productivity Track Winner

🏆 Special prize: Best use of AI agents in hack

Builds customized agent swarms from natural language input. By understanding the user’s goals and requirements, Inception Swarm assembles a set of agents specifically designed to handle the requested tasks. Check it out here.

Bobcat 🎵

🏆 Second Place Overall Winner

🏆 Arts, Media, and Design Track Winner

Bobcat is not just a gadget: it's your personal, empathetic AI friend that listens, responds, and creates songs tailored to your emotions and personal story. Pairing real-time, multimodal sentiment analysis with song generation makes for a uniquely interactive, personalized experience. See it in action here.

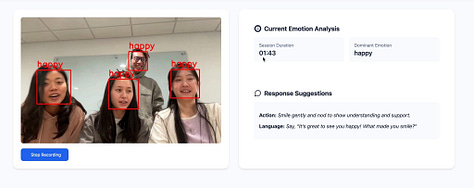

Alex and Mia 😨

🏆 Third Place Overall Winner

🏆 Health and Wellness Track Winner

Helps people with alexithymia (aka emotional blindness) perform better in the workforce and fosters collaborative inclusiveness. The team developed a real-time emotion-processing app that provides social interaction suggestions: Alex and Mia can identify the emotions of the people a user is interacting with and suggest how to respond, in both words and actions. Check it out here. (Also note their Responsible AI slide 🩷👏)

Flashback 🔄

🏆 Education Track Winner

Flashback is an AI assistant that travels with you to refresh your memory. It works by recording footage from your point of view and sending it to the cloud. Later, when you need to recall past events, you can use a web interface to ask questions about who you met or important details about the people you talked to. Try it here.

sustAInData 🌳

🏆 Social Impact & Climate Track Winner

A chatbot that provides comprehensive consulting services to help customers build and manage data centers effectively while addressing the existential threat of climate change. It monitors progress toward ESG goals in real time, keeping operations continuously aligned with sustainability efforts. Check it out here.

Evalon 💬

🏆 Special prize: Best LLM evaluations

A B2B platform that lets enterprise clients automatically stress-test their LLM before deploying it to production. Users provide high-level details about the tasks they want to test, and the platform generates multiple "tests" (each a user task, chatbot goal, and user persona) and simulates a live conversation between a synthetic user and the client's chatbot. It then evaluates the generated chat history on task completion, friendliness, repetitiveness, and more, and provides a dashboard to monitor the results. Check it out on Devpost here and try it here.

These projects may not have won a prize, but I thought they were really cool too:

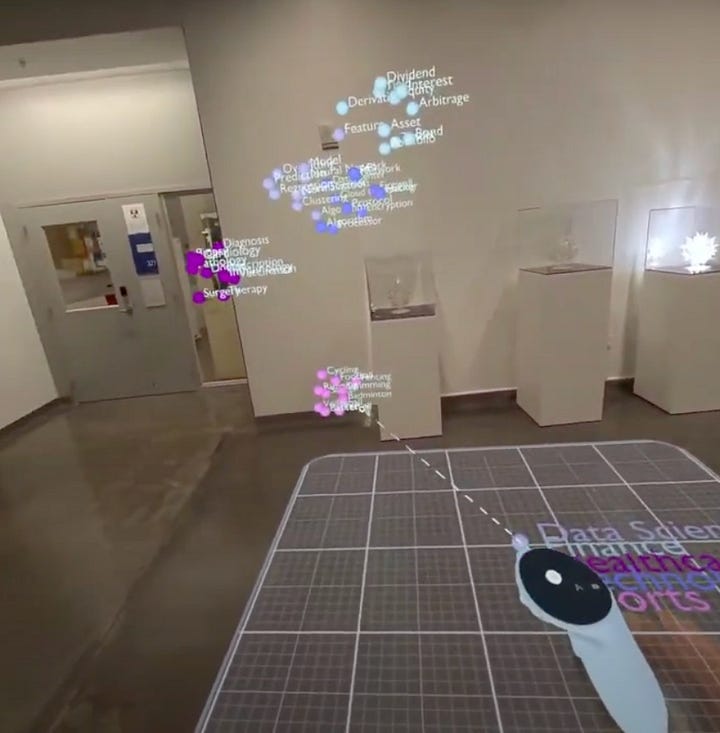

PlotVerseXR 🥽

The fundamental limitation of 2D screens (trying to compress three dimensions into two) has always forced us to sacrifice either information or clarity. PlotVerseXR breaks free from this constraint, transforming raw datasets into immersive XR visualizations using nothing but a simple natural language prompt. See it here.

Mux 🗣️

Mux is an AI language partner available at the press of a button: anyone with a phone can call in at any time and engage in real-time dialogue. Mux can share real-time news, play games to deepen understanding, and practice live speaking scenarios to expand your vocabulary. It listens, responds, and adapts, offering feedback that helps you improve with every interaction. Mux turns language learning into a human experience, accessible anytime, anywhere. I love their trailer. Project on Devpost here.

Music Transcriber 🎤

An app that lets you hum or sing into a recording and then converts that recording into a MIDI (Musical Instrument Digital Interface) file. You can then assign an instrument to the MIDI track and use the resulting music for personal or professional projects. Check it out here.

ImproViz 📝

ImproViz was inspired by the need to make lectures more engaging and effective for visual learners. The idea came from observing how traditional note-taking and learning tools often fall short in providing interactive, visual representations of complex topics. The goal was to create a tool that bridges this gap by turning spoken words into meaningful illustrations. Check it out here. Their demo shows the tool's near real-time visualizations.

Air Piano X 🎹

Air Piano X is a digital piano interface designed for ultimate portability. Using motion sensors and haptic feedback, Air Piano X allows users to play a virtual piano by simply positioning their hands as they would on a traditional keyboard. The system translates hand and finger movements into musical notes in real time, giving users an authentic piano-playing experience without a physical instrument. See it in action here!

MagicUp 🪞

MagicUp turns makeup application into an effortless, personalized “magic mirror” experience. The mirror looks and functions like a regular mirror until activated by a hot word. The user can then specify their desired makeup style, and a customized open-source face-parsing model and OpenAI APIs generate a virtual version of their face with that makeup applied. The virtual makeup is displayed on a screen behind the half-silvered mirror, so the user sees both their real face and the AI-suggested makeup overlay at once. This synchronized display lets the user “trace” the AI-applied makeup onto their own face, following each feature for precise, professional results.

And there were many more that I didn’t get the chance to see over the weekend. You can check them all out here!

A special thanks to the incredible student organizers who put this together: Jared, Suneel, Ritu, Abhishek, John, Harshitha, Hung Yin, and Violet. Jon Reifschneider and Vivek Rao were our fearless leaders, and Amanda Jolley made sure nothing caught fire (while simultaneously making sure everything was 🔥). And thank you to the judges who volunteered their time with us on Sunday.

FOMO? Join us next fall as a participant, volunteer, judge, spectator, or cheerleader! 📣