NVIDIA GTC Day 1 did not disappoint. The keynote covered the history of AI (AlexNet and cats), robots (including appearances from Disney robots), digital twins of products, and, of course, the unveiling of the new Blackwell GPU. Watch the keynote here (I definitely recommend the first ~30 minutes and the last ~30 minutes). The AI art at the beginning was stunning to see in the SAP Center, with the lights synced to the visuals. To give you a sense of the scale of the conference: the arena was packed, with people sitting on the stairs and overflow rooms in use.

I also got to meet interesting people building things, from pathology AI tools to object detection for international security.

🗞 This Week in News

🤖 Impressive results from combining Figure’s humanoid robot tech with OpenAI’s language models: the robot's movements are approaching human speed. Twitter thread here. (They are hiring!)

Crafting LLMs into Collaborative Thought Partners: a great series on approaches for getting LLMs to do what you want (by my friend Rob Grzywinski).

🥁 Interesting Products & Features

Grok-1 released: xAI released the weights and architecture of Grok-1, their 314-billion-parameter Mixture-of-Experts model, trained from scratch on a custom training stack built on top of JAX and Rust.
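For readers new to Mixture-of-Experts: a router scores every expert for each token, only the top-k experts actually run, and their outputs are mixed using gate weights renormalized over the chosen experts. Grok-1's published specs list 8 experts with 2 active per token. The sketch below is a toy, pure-Python illustration of top-2 routing under that general scheme, not xAI's JAX implementation; the router rows and the "experts" (which just scale their input) are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(
    token: Vector,
    router_weights: Sequence[Vector],  # hypothetical router: one weight row per expert
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Send one token to its top-k experts and mix their outputs by gate weight."""
    # Router logit per expert: dot product of the token with that expert's row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Indices of the k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)  # only the k chosen experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out


# Toy usage: 4 "experts" that just scale their input by 1, 2, 3, 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
# Routes to the two highest-scoring experts (indices 3 and 2) and mixes them.
print(top_k_moe([1.0, 0.0], router, experts, k=2))
```

The point of the design is compute: the model holds all experts' parameters, but each token only pays for k of them.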

Midjourney is testing a “consistent characters” feature. The new algorithm can use the same character across multiple images and styles.

📄 Interesting Papers

MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training: Apple joins the multimodal LLM game, sharing a family of multimodal models with up to 30B parameters, including both dense models and mixture-of-experts (MoE) variants. The models are state-of-the-art (SOTA) on pre-training metrics and achieve competitive performance after supervised fine-tuning on a range of established multimodal benchmarks. Authors from Apple.

🪱 ComPromptMized: Unleashing Zero-click Worms that Target GenAI-Powered Applications: The authors created a generative AI worm (“Morris II”) using an “adversarial self-replicating prompt” that targets GenAI-powered applications, and they demonstrate the worm’s ability to spread spam and exfiltrate user data. Authors from Cornell Tech and Intuit.

Evil Geniuses: Delving into the Safety of LLM-based Agents: This paper uses a series of manual jailbreak prompts, along with a virtual chat-powered “evil plan development team”, to thoroughly probe the safety of LLM-based agents. Authors from NVIDIA.

Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM: This paper proposes an approach to training MoE LLMs. Expert models are first trained asynchronously; their feedforward parameters are then brought together as experts in Mixture-of-Experts (MoE) layers, while the remaining parameters are averaged. An MoE-finetuning stage follows. Authors from Meta AI.
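The "mix" step is easy to picture with a toy model. The sketch below is a hypothetical, pure-Python illustration of the merge rule summarized above, not Meta's code: each model is a dict from parameter name to a flattened weight list, feedforward weights (identified here by an assumed `.ffn.` naming convention) are kept per expert, and everything else is averaged elementwise. The router, which still has to be learned during the MoE-finetuning stage, is omitted.

```python
from typing import Dict, List, Union

Params = Dict[str, List[float]]  # toy model: parameter name -> flattened weights
Merged = Dict[str, Union[List[float], List[List[float]]]]


def is_feedforward(name: str) -> bool:
    # Hypothetical naming convention: feedforward weights contain ".ffn."
    return ".ffn." in name


def branch_train_mix_merge(experts: List[Params]) -> Merged:
    """Combine separately trained expert models into one MoE parameter set.

    Feedforward weights are kept per expert (one MoE expert each); every other
    parameter (attention, embeddings, norms) is averaged elementwise.
    """
    if not experts:
        raise ValueError("need at least one expert model")
    merged: Merged = {}
    for name in experts[0]:
        tensors = [e[name] for e in experts]
        if is_feedforward(name):
            merged[name] = [list(t) for t in tensors]  # stacked, one entry per expert
        else:
            merged[name] = [sum(vals) / len(tensors) for vals in zip(*tensors)]
    return merged


# Toy usage: two "experts" sharing the same architecture (hypothetical weights).
math_expert = {"layer0.attn.q": [1.0, 3.0], "layer0.ffn.w1": [1.0]}
code_expert = {"layer0.attn.q": [3.0, 5.0], "layer0.ffn.w1": [2.0]}
merged = branch_train_mix_merge([math_expert, code_expert])
print(merged)  # {'layer0.attn.q': [2.0, 4.0], 'layer0.ffn.w1': [[1.0], [2.0]]}
```

Averaging only works because the experts share one architecture; the feedforward blocks are kept separate so each domain's specialization survives as its own expert.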

SemCity: Semantic Scene Generation with Triplane Diffusion: A 3D diffusion model for semantic scene generation in real-world outdoor environments. Authors from KAIST.

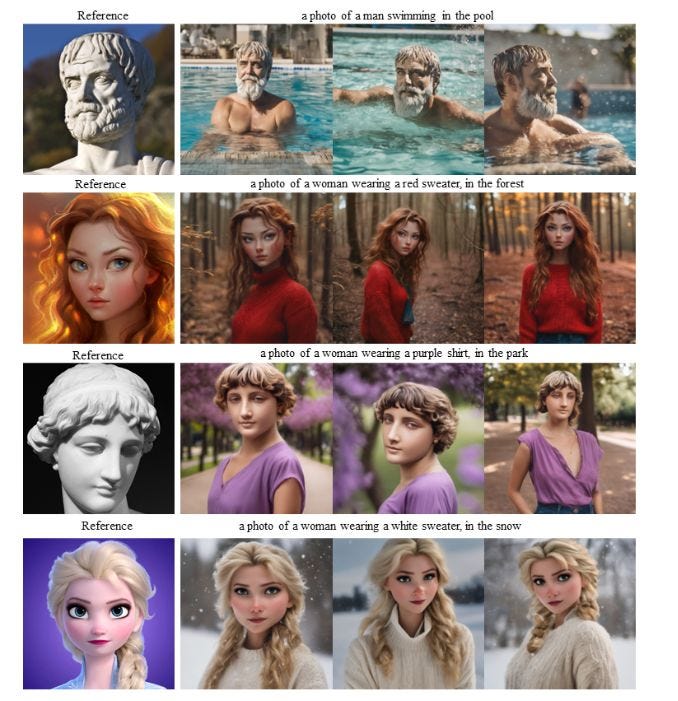

Infinite-ID: Identity-preserved Personalization via ID-semantics Decoupling Paradigm: This paper introduces identity-enhanced training, which adds an image cross-attention module to capture sufficient identity (ID) information while deactivating the diffusion model's original text cross-attention module. This ensures that the image stream faithfully represents the identity provided by the reference image while limiting interference from the text input.
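To make the "deactivate the text branch" idea concrete, here is a toy single-head sketch in which image latents attend separately to text tokens and to identity tokens from a reference image. Several assumptions are not from the paper: the two branch outputs are simply added to a residual, learned projections are omitted, and `text_enabled=False` stands in for deactivating text cross-attention during identity-enhanced training. `id_block` and all token values are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]  # rows are tokens, columns are feature dims


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, context: Matrix) -> Matrix:
    """Single-head scaled dot-product attention.

    Context tokens serve directly as keys and values (no learned projections).
    """
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, context)) for j in range(d)])
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def id_block(latents: Matrix, text_tokens: Matrix, id_tokens: Matrix,
             text_enabled: bool = True) -> Matrix:
    """Hypothetical block: residual + image (ID) cross-attn, + text cross-attn if enabled."""
    out = add(latents, cross_attention(latents, id_tokens))
    if text_enabled:  # identity-enhanced training would pass text_enabled=False
        out = add(out, cross_attention(latents, text_tokens))
    return out


latents = [[1.0, 0.0]]
text_tokens = [[10.0, 10.0]]
id_tokens = [[0.5, 0.5]]
print(id_block(latents, text_tokens, id_tokens, text_enabled=False))  # [[1.5, 0.5]]
print(id_block(latents, text_tokens, id_tokens))                      # [[11.5, 10.5]]
```

With the text branch switched off, the only signal added to the latents comes from the identity tokens, which is the intuition behind training the image stream in isolation.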

🧠 Sources of Inspiration

Raleigh-Durham Startup Week, April 9-12: registration is free! Workshops, networking, and office hours.